Table of Contents

- What Is NIST SP 800-30?

- NIST SP 800-30 Risk Assessment Process

- NIST SP 800-30 vs Other NIST Frameworks

- Challenges with NIST SP 800-30

- NIST SP 800-30 Step-by-Step Guide

- NIST SP 800-30 Best Practices

- How to Simplify NIST 800-30 with Isora GRC

- NIST SP 800-30 FAQs

- How long does it take to complete a NIST SP 800-30 risk assessment?

- Can NIST SP 800-30 assessments be automated?

- When should I move from spreadsheets to a dedicated risk assessment tool for NIST SP 800-30?

- How can I scale SP 800-30 across multiple business units or subsidiaries?

- How does NIST SP 800-30 integrate with third-party risk management programs?

- What risk metrics should I track for visibility across all risk assessments?

- How do I align NIST SP 800-30 with ISO/IEC 27001?

- How do I sync risk assessments to Jira, ServiceNow, or other remediation tools?

- NIST SP 800-30 Key Terms

Most security leaders know NIST SP 800-30 by name, but few use it with confidence. The document is dense, written for broad applicability and built on a threat-based model that's difficult to sustain across large, complex environments. For many, that makes 800-30 more of a theoretical reference than a working framework.

Yet, the value of SP 800-30 becomes clear through a control-based lens. Here, instead of modeling every possible threat, teams can focus on identifying, verifying, and measuring the real impact of missing controls. The result is faster assessments, more consistent scores, and risk data that’s easier for business leaders to act on.

This guide explains how to implement a control-based approach to NIST SP 800-30 risk assessments – and how a tool like Isora GRC can help simplify that process at scale.

What Is NIST SP 800-30?

NIST SP 800-30 Rev. 1 is a cybersecurity risk assessment guide. It outlines a flexible, structured, repeatable process for identifying, evaluating, ranking, and addressing information security risks.

NIST SP 800-30 defines a four-step model for conducting risk assessments:

- Prepare for the assessment

- Conduct the assessment

- Communicate the results

- Maintain the assessment over time

NIST SP 800-30 is not required by law, but it is highly recommended. It's one of the most adaptable frameworks available and can be tailored to different organizational sizes, missions, and security postures.

Today, SP 800-30 serves as a trusted IT security risk assessment baseline for public and private sectors worldwide. Still, many organizations remain unsure about when to actually use SP 800-30.

When Do You Use NIST 800-30?

NIST SP 800-30 is best for organizations that need to perform a structured, threat-based risk assessment. It’s especially useful when assessing complex systems, high-impact environments, or regulatory landscapes that require formal risk documentation.

Organizations typically use NIST 800-30 to:

- Support security authorization decisions under the Federal Information Security Modernization Act (FISMA)

- Evaluate risk across enterprise-wide environments, including hybrid or cloud-based systems

- Inform control selection or tailoring in conjunction with NIST SP 800-53

- Fulfill requirements from frameworks like the NIST Cybersecurity Framework (CSF), ISO/IEC 27001, or FedRAMP

- Establish a defensible methodology for identifying and prioritizing risks across business units

Because NIST SP 800-30 is flexible and non-prescriptive, it can be adapted to fit nearly any organization's risk management process, across the public sector and private businesses alike.

However, this flexibility can also be a weakness for some. A closer look at how this framework is organized can help explain why.

How Is NIST 800-30 Organized?

NIST SP 800-30 is divided into a prologue, three chapters, and multiple appendices. The sections are organized sequentially, each building on the last to guide organizations through the entire risk assessment process, step by step.

The NIST 800-30 chapters are:

- Chapter One – Introduction: Describes the document’s purpose, audience, structure, and relationship to other NIST publications.

- Chapter Two – The Fundamentals: Defines key concepts like risk, threat, vulnerability, and impact, and introduces the idea of risk framing.

- Chapter Three – The Process: Explains the four-step risk assessment model – prepare, conduct, communicate, and maintain.

The supporting Appendices contain sample threat sources, risk scales, assessment use cases, and implementation tips, but are more high-level than prescriptive. For some, that flexibility is beneficial. But, more often than not, it can actually make NIST SP 800-30 implementation more challenging for teams.

An overview of the NIST SP 800-30 risk assessment process can help explain why.

NIST SP 800-30 Risk Assessment Process

The NIST SP 800-30 risk assessment process outlines four steps to help organizations identify, analyze, and manage cybersecurity risks: prepare, conduct, communicate, and maintain. Each step builds on the last to help organizations translate raw system data into actionable risk decisions.

The four steps of the NIST SP 800-30 risk assessment process are:

- Prepare for Assessment

- Conduct Assessment

- Communicate Results

- Maintain Assessment

NIST 800-30 defines each step of this process in more detail throughout Chapter Three. However, even with additional explanation, many organizations still find the framework’s guidance irrelevant or vague.

Here’s exactly what each step of an 800-30 risk assessment entails, according to NIST.

Step 1 – Prepare for Assessment

The first step of NIST SP 800-30 is to prepare for the risk assessment. That includes establishing purpose and scope, assumptions and constraints, information sources, and more.

According to NIST SP 800-30, to prepare for a risk assessment, organizations should:

Define purpose and scope. State why the assessment is being conducted and which systems, units, or environments it will cover.

State assumptions and constraints. Identify any known conditions that could influence results (e.g., resource limits, shared controls, incomplete data).

Identify information sources. Select the data and inputs that will inform risk analysis (e.g., threat intelligence, system documentation, or expert input).

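Select a risk model and analytic approach. Document the risk model (how threat, vulnerability, likelihood, and impact factors combine), the assessment approach (qualitative, quantitative, or semi-quantitative scales), and the analysis approach (e.g., threat-oriented, asset/impact-oriented, or vulnerability-oriented).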

Establish outputs and decision criteria. Define how risks will be rated, categorized, and used to support organizational decision-making.

The goal of this step is to establish expectations around how the risk assessment will be conducted and how its results will inform organizational risk decisions.

Step 2 – Conduct Assessment

The second step in the NIST SP 800-30 risk assessment process is to conduct the assessment. That includes identifying relevant threats, known vulnerabilities, environmental factors, and the potential impact of adverse events.

According to NIST SP 800-30, to conduct a risk assessment, organizations should:

Identify threat sources and threat events. Determine which types of threats are relevant (e.g., adversarial, environmental, accidental) and what specific events they might trigger.

Identify vulnerabilities and predisposing conditions. Pinpoint the weaknesses that could be exploited and any system or organizational conditions that could increase risk.

Assess likelihood and impact. Estimate the probability that a threat event will occur and the expected impact on operations, assets, or individuals.

Document risk determinations. Record the results of the assessment, including the rationale behind each likelihood and impact rating.

The goal of this step is to analyze how threats, vulnerabilities, and impacts interact and to produce a set of traceable, risk-informed findings.
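To make traceability concrete, here is a minimal Python sketch of one threat-oriented risk determination. The class name, fields, and three-level scale are illustrative assumptions rather than a NIST-defined schema. The point is that each rating travels with the chain that produced it and a written rationale.

```python
from dataclasses import dataclass

# Illustrative three-level scale; the SP 800-30 appendices also offer five-level scales.
LEVELS = ("Low", "Moderate", "High")


@dataclass(frozen=True)
class RiskDetermination:
    """One threat-oriented finding: source -> event -> vulnerability -> impact (hypothetical schema)."""
    threat_source: str
    threat_event: str
    vulnerability: str
    predisposing_conditions: tuple[str, ...]
    likelihood: str
    impact: str
    rationale: str

    def __post_init__(self) -> None:
        # Reject ratings outside the agreed scale.
        if self.likelihood not in LEVELS or self.impact not in LEVELS:
            raise ValueError("likelihood and impact must use the agreed scale")
        # Traceability: every determination needs a written rationale.
        if not self.rationale.strip():
            raise ValueError("each determination needs a documented rationale")


finding = RiskDetermination(
    threat_source="External adversary",
    threat_event="Credential stuffing against the VPN",
    vulnerability="MFA not enforced for remote access",
    predisposing_conditions=("internet-facing",),
    likelihood="High",
    impact="Moderate",
    rationale="VPN is reachable from the internet and accepts password-only logins.",
)
```

Because the record is frozen and validated on creation, a rating that skips the rationale or uses an off-scale value fails immediately instead of surfacing during an audit.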

Step 3 – Communicate Results

The third step of NIST SP 800-30 is to communicate the results of the risk assessment. That includes documenting the findings, tailoring them to different audiences, and supporting decision-making along the way.

According to NIST SP 800-30, to communicate the results of the assessment, organizations should:

Prepare assessment reports. Summarize the purpose, scope, methodology, findings, and conclusions of the assessment, including identified risks and the reasoning behind likelihood and impact ratings.

Tailor communication to stakeholders. Present the results in ways that are appropriate for the intended audience (e.g., high-level summaries for executives, detailed data for technical teams).

State confidence levels and limitations. Document the level of confidence in assessment results and any known gaps or assumptions that could affect interpretation.

Ensure traceability and repeatability. Make sure all risk determinations can be traced back to the data and analysis that produced them to support reassessments, audits, and reviews.

The goal of this step is to make sure risk information is accurate, transparent, and ready to inform follow-up decisions.

Step 4 – Maintain Assessment

The fourth step of NIST SP 800-30 is to maintain the assessment. This step focuses on keeping risk data current as systems, threats, and business conditions change.

According to NIST SP 800-30, to maintain the risk assessment, organizations should:

Monitor for change. Track environmental, technological, and organizational changes that could affect risk posture (e.g., new systems, evolving threats, updated regulatory requirements).

Reassess risk over time. Conduct follow-up assessments at regular intervals or in response to trigger events (e.g., incidents, major system changes, policy updates).

Update risk data and ratings. Adjust likelihood, impact, and risk determinations based on new information, validated control changes, or shifts in system usage.

Support continuous improvement. Integrate risk assessments into security and governance workflows to keep decisions well-informed and aligned with reality.

The goal of this step is to treat risk assessment as a living process, one that adapts to change, reflects current conditions, and supports long-term accountability.

NIST SP 800-30 vs Other NIST Frameworks

NIST SP 800-30 is one of several guidelines developed by the National Institute of Standards and Technology (NIST) to help organizations manage information security risk. On its own, SP 800-30 outlines a process for identifying and analyzing cybersecurity risk. But it also fits into a broader ecosystem of NIST frameworks.

Together, these guidelines define how, when, and why risk assessments should happen, and what they should evaluate. In other words, when combined strategically, they can form a complete risk management program.

NIST frameworks for information security risk management include:

- NIST SP 800-39 defines risk strategy and tolerance at the enterprise level.

- NIST SP 800-37 defines when to assess risk and how the results feed into system authorization and monitoring.

- NIST SP 800-53 defines what to assess (i.e., specific controls and control families).

- NIST CSF (Cybersecurity Framework) links those efforts to business outcomes and strategic priorities.

Each NIST publication serves a specific purpose and meets different requirements. For instance, NIST SP 800-53 is mandatory for federal agencies under FISMA (Federal Information Security Modernization Act), but NIST SP 800-30 is not.

Unfortunately, a lack of alignment across NIST frameworks often makes them challenging to adopt and maintain. For many organizations, implementing SP 800-30 can be especially tricky.

Challenges with NIST SP 800-30

NIST SP 800-30 is challenging to scale because it uses a threat-based model. Let’s take a closer look at exactly what that means.

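NIST models risk as a chain of factors: Threat Source → Threat Event → Vulnerability (in the context of Predisposing Conditions) → Adverse Impact. Risk is then expressed as a combination of the likelihood that this chain plays out and the severity of the resulting impact.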

NIST SP 800-30 requires teams to identify potential threat sources, define the specific threat events those sources might trigger, map known vulnerabilities, account for environmental conditions, and estimate the resulting impact – all before documenting any actual risk.

The process might look simple on paper. But in practice, this level of threat modeling takes time, coordination, and documentation that most organizations just can’t sustain long-term. Persistent issues tend to arise as a result.
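A rough way to see why this breaks down: if a team tried to model every combination, the number of scenarios grows multiplicatively. The sketch below assumes, purely for illustration, a fixed number of events per source and vulnerabilities per event. Real assessments prune aggressively, but the growth pattern is the same.

```python
from math import prod


def scenario_count(threat_sources: int, events_per_source: int,
                   vulnerabilities_per_event: int, systems: int) -> int:
    """Upper bound on threat scenarios if every combination is modeled for every system."""
    return prod([threat_sources, events_per_source, vulnerabilities_per_event, systems])


# Illustrative numbers only: 10 sources x 5 events x 3 vulnerabilities x 40 systems.
print(scenario_count(10, 5, 3, 40))  # 6000
```

Adding one more system adds another full set of combinations, which is why threat-event mapping becomes unmanageable as scope grows.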

Here are five common challenges with implementing NIST SP 800-30.

Threat-Event Mapping Breaks Down at Scale

Teams using SP 800-30 must identify threat sources and map them to specific threat events for each system. But modeling dozens of potential scenarios across environments, business units, and vendors is unmanageable without centralized context or automation.

Vulnerabilities Aren’t Based on Verified Control Status

The framework asks teams to identify vulnerabilities, but doesn’t explain how to verify whether security controls are actually in place. Without a structured approach for gathering control evidence, teams that rely on guesswork or outdated documentation often miss what’s really at stake.

Risk Scoring Isn’t Consistent Across Teams

Without a solid definition from NIST, what makes a risk “high,” “moderate,” or “low” is often left up to teams. Different groups apply different thresholds and assumptions as a result, and one group’s high risk might be another group’s low. That makes it harder to compare risk across systems, justify decisions, or prioritize remediation efforts at scale.
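The problem is easy to reproduce. In this hypothetical Python sketch, two teams score the same risk as likelihood × impact on 1-5 scales but use different cut-offs for High and Moderate, so the same score receives two different labels. The scales and thresholds are assumptions for illustration, and agreeing on one set of thresholds up front removes the discrepancy.

```python
# Hypothetical thresholds two teams might apply to the same 1-25 score
# (likelihood x impact, each rated 1-5).
TEAM_A = {"High": 15, "Moderate": 8}
TEAM_B = {"High": 20, "Moderate": 12}


def label(score: int, thresholds: dict[str, int]) -> str:
    """Return High, Moderate, or Low given minimum scores for High and Moderate."""
    if score >= thresholds["High"]:
        return "High"
    if score >= thresholds["Moderate"]:
        return "Moderate"
    return "Low"


score = 4 * 4  # likelihood 4, impact 4
print(label(score, TEAM_A), label(score, TEAM_B))  # High Moderate
```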

Evidence Collection Is Slow and Inconsistent

Most teams still rely on spreadsheets, emails, and manual follow-ups to gather control implementation data. But spotting gaps, confirming accuracy, or tracking responses is difficult when each response arrives in a different format, with varying levels of detail and documentation across multiple systems and teams.

Risk Registers Don’t Support Decision-Making

Too often, risk entries exist in abstract terms like “unauthorized access” or “data loss” with no link to a specific control gap, affected system, or responsible owner. Yet, without that context, teams can’t prioritize remediation, assign accountability, or monitor how risk fluctuates over time.

NIST SP 800-30 becomes manageable when teams stop modeling every possible threat and start assessing the actual status of security controls.

A control-based approach replaces assumptions with real implementation data, so risks are defined based on what’s missing, who’s responsible, and how systems are actually configured.

Threat-Based Models vs. Control-Based Models

Threat-based risk assessments begin with hypothetical scenarios, while control-based assessments begin with the actual state of security controls. Both aim to measure cybersecurity risk, but they rely on fundamentally different inputs to work.

In threat-based models like NIST SP 800-30, teams identify possible threat scenarios, map them to threat events, evaluate known vulnerabilities, and estimate the likelihood and impact. This approach is flexible and thorough, but difficult to scale without extensive modeling and data collection.

In control-based models, teams verify whether controls are implemented and use the absence of controls as an indicator of risk. This approach is grounded in reality and makes the process faster to execute, easier to scale, and more consistent across systems and teams.

Control-based risk assessment models make it possible to:

- Work across frameworks without rebuilding threat models for every system

- Use verified control data instead of assumptions about threat likelihood

- Run consistent, defensible assessments across departments, vendors, and business units

Instead of modeling every possible threat, teams assess what controls are missing and evaluate the risk based on the potential consequences. The outputs are the same (likelihood and impact ratings), but they're based on real data and easier to apply organization-wide.

The truth is, most teams today already know where the exposures are. What they need now is a practical way to turn those gaps into risk-based decisions that are traceable, measurable, and repeatable across every step.

Mapping NIST Concepts to Control-Based Practice

A threat-first model like NIST SP 800-30 calculates risk by starting with hypothetical scenarios and working toward potential impact. But a control-based approach flips that logic around. Instead of modeling every possible threat, teams begin with observable control gaps and use system context to predict, not guess, what could go wrong.

Here’s how NIST SP 800-30 maps to control-based models in practice.

| NIST SP 800-30 | Control-Based Risk Models |
|---|---|
| Threat Source, Threat Event, & Vulnerability | Implied by missing or weak controls |
| Predisposing Conditions | Pulled from system metadata |
| Security Control | Object of assessment |
| Likelihood/Impact | Scored using control gap severity and system exposure |
| Risk | Structured as a gap-based entry in a risk register |
| Risk Response | Documented via remediation plans and exceptions |

Control-based risk assessments follow the same four-step process as NIST SP 800-30. However, instead of relying on assumptions about threats, each step is driven by actual implementation data, and every risk has a source, a scope, and a clear path forward from the start.
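The mapping in the table can be sketched in Python. Everything here is hypothetical: the control keys, the implied threat events (which echo the examples used in Step 2 of this guide), and the metadata flags. The sketch only shows how a control gap plus system metadata becomes a gap-based register entry without building a threat model from scratch.

```python
from dataclasses import dataclass

# Hypothetical lookups: the threat event a missing control implies, and the
# system metadata flags treated as predisposing conditions.
IMPLIED_EVENT = {
    "access_control": "Unauthorized access",
    "audit_logging": "Undetected compromise",
    "backups": "Irreversible data loss",
}
CONDITION_FLAGS = ("internet_facing", "shared_admin_access", "patch_backlog")


@dataclass
class GapRiskEntry:
    control: str                        # the control is the object of assessment
    implied_threat_event: str           # threat source/event implied by the gap
    predisposing_conditions: list[str]  # pulled from system metadata
    system: str


def entry_from_gap(control: str, system: str, metadata: dict[str, bool]) -> GapRiskEntry:
    """Turn one control gap plus system metadata into a gap-based register entry."""
    conditions = [flag for flag in CONDITION_FLAGS if metadata.get(flag, False)]
    return GapRiskEntry(
        control=control,
        implied_threat_event=IMPLIED_EVENT.get(control, "Unspecified adverse event"),
        predisposing_conditions=conditions,
        system=system,
    )


entry = entry_from_gap("audit_logging", "payroll-app",
                       {"internet_facing": True, "patch_backlog": False})
```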

NIST SP 800-30 Step-by-Step Guide

NIST SP 800-30 defines a four-step process for assessing risk: prepare, conduct, communicate, and maintain. A control-based approach follows the same structure, but replaces abstract threat modeling with observable control gaps, system context, and real implementation data.

Here’s how to conduct NIST SP 800-30 risk assessments using a control-first lens.

Step 1 – Prepare for the Assessment

Preparation involves defining the purpose, scope, roles, methods, and assumptions that will guide the risk assessment from start to finish. In a control-based model, this step lays the foundation for collecting consistent, actionable data and evaluating risk in context, not theory. At a high level, this is where teams decide:

- What to assess

- How to collect evidence

- How to interpret and apply results

It’s also where teams establish a shared risk frame: a clear definition of how risk will be measured, scored, and communicated across the organization. This includes setting parameters like:

- Risk tolerance

- Business priorities

- Resource or data constraints

- Tradeoffs between depth, speed, and coverage

These choices directly impact how the assessment will be scoped, scored, and communicated.

The preparation phase includes four substeps.

Define the Scope

A clearly defined scope keeps the assessment focused, traceable, and aligned with organizational needs. To define the scope:

Identify the purpose. Why is this assessment being conducted? Examples include annual compliance review, vendor evaluation, or internal control validation.

List in-scope entities. Identify all systems, vendors, and business units to be assessed. For each one, document:

- System names and business functions

- Types of data involved (e.g., PII, PHI, CUI)

- Ownership and operational boundaries

Document exclusions. List any assets or environments that are out of scope and why (e.g., owned by another entity, covered in a separate audit).

Establish constraints and assumptions. Note any known limitations (e.g., time-boxed deadlines, incomplete documentation, inherited controls from shared infrastructure).

Select risk model components. Define the likelihood and impact scales to be used (e.g., High/Moderate/Low) and how they'll be scored.

Choose the control framework. Select the framework(s) to guide the evaluation (e.g., NIST SP 800-53, NIST CSF, CIS Controls, HIPAA). If using a tailored or hybrid control set, document how it was created and which entities it applies to.

Map controls to assessment targets. Use system metadata (e.g., cloud-hosted, internet-facing, data criticality) to assign relevant controls to each asset or vendor. Control assignments should be role-based and business-driven.

Document rationale. Record the reasoning behind each control mapping for transparency and future audits.

Obtain formal approval. Secure sign-off from an executive sponsor or risk acceptance authority to validate and confirm support for execution.
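Control mapping and its rationale can be captured together. The sketch below assumes a small, hypothetical rule table keyed on metadata flags and uses NIST SP 800-53 control identifiers (AC-2, AU-2, CP-9, IA-2, SC-7, SC-28, SI-2) purely as labels. Which controls a given attribute should trigger is a tailoring decision for your organization, not something this code or NIST prescribes.

```python
# Hypothetical rule set: which SP 800-53 control IDs to assign when a metadata
# attribute is true. Real mappings come from your framework and tailoring decisions.
BASELINE = {"AC-2", "IA-2", "SI-2"}        # assigned to every in-scope asset
RULES = {
    "internet_facing": {"SC-7"},           # boundary protection
    "cloud_hosted": {"AC-2", "AU-2"},      # account management, event logging
    "regulated_data": {"SC-28", "AU-2"},   # protection of information at rest, event logging
    "business_critical": {"CP-9"},         # system backup
}


def assign_controls(metadata: dict[str, bool]) -> dict[str, list[str]]:
    """Return each assigned control with the reasons (metadata flags) that triggered it."""
    rationale: dict[str, list[str]] = {control: ["baseline"] for control in BASELINE}
    for attribute, controls in RULES.items():
        if metadata.get(attribute, False):
            for control in controls:
                rationale.setdefault(control, []).append(attribute)
    return rationale


mapping = assign_controls({"internet_facing": True, "regulated_data": True})
```

Keeping the triggering attributes next to each control gives auditors the "why" behind every assignment without a separate document.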

Establish Governance and Roles

Clear roles and ownership reduce delays and improve participation across the organization. To establish governance:

Use a RACI matrix. Define who is Responsible, Accountable, Consulted, and Informed at each stage of the process.

Assign an executive sponsor. Typically, a VP, director, or business unit lead with the authority to accept risk and allocate resources.

Designate an assessment lead. Someone who owns scheduling, coordination, and blocker resolution.

Identify control owners and evidence providers. These are the individuals or teams responsible for answering questionnaires and providing documentation.

Assign reviewers or risk analysts. These individuals validate responses and apply the risk model in later steps.

Set cadence and escalation paths. Schedule regular reviews and define how non-responses or blockers will be escalated.
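A RACI matrix is easy to sanity-check automatically. This sketch assumes a simple dictionary layout and applies the common RACI convention that each stage has exactly one Accountable party and at least one Responsible party. The stage and team names are placeholders.

```python
# Hypothetical RACI matrix: stage -> {person or team: role letter}.
RACI = {
    "Define scope": {"CISO": "A", "Assessment lead": "R", "System owners": "C"},
    "Collect evidence": {"Assessment lead": "A", "Control owners": "R", "Executive sponsor": "I"},
    "Score risks": {"Risk analysts": "R", "Control owners": "C"},
}


def raci_problems(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Flag stages without exactly one Accountable party or without anyone Responsible."""
    problems = []
    for stage, assignments in matrix.items():
        roles = list(assignments.values())
        if roles.count("A") != 1:
            problems.append(f"{stage}: needs exactly one Accountable")
        if "R" not in roles:
            problems.append(f"{stage}: nobody is Responsible")
    return problems


print(raci_problems(RACI))  # ['Score risks: needs exactly one Accountable']
```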

Establish an Asset Inventory

Teams must understand the environment being assessed before assigning controls or evaluating risk. NIST calls this “characterizing the environment.” To build a useful inventory:

Compile a list of systems and vendors. Pull from CMDBs, procurement records, cloud inventories, or internal spreadsheets.

Categorize each asset. For each system or vendor, capture:

- Business function

- System type (e.g., application, database, SaaS, third party)

- Data classification (e.g., public, confidential, regulated)

- Business criticality (e.g., Critical, High, Moderate, Low)

Document predisposing conditions. Record any environmental or architectural details that influence risk likelihood, including:

- Network exposure (e.g., internet-facing, segmented)

- Administrative control mode (e.g., internal team, MSP-managed)

- Patching frequency or backlog

- Backup or redundancy status

Create a structured register. Store all asset data in a centralized, filterable format. This will be used to assign controls, route evidence collection, and support risk scoring.
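A structured register can start as a list of typed records. The sketch below is a minimal, hypothetical schema using the categories listed above, with helpers that filter by attribute or by predisposing condition. That is enough to route questionnaires or pull exposure data into scoring.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    name: str
    business_function: str
    system_type: str          # e.g., application, database, SaaS, third party
    data_classification: str  # e.g., public, confidential, regulated
    criticality: str          # e.g., Critical, High, Moderate, Low
    predisposing_conditions: set[str] = field(default_factory=set)


REGISTER = [
    Asset("payroll-app", "HR", "SaaS", "regulated", "High", {"internet-facing"}),
    Asset("build-server", "Engineering", "application", "confidential", "Moderate", {"patch-backlog"}),
    Asset("marketing-site", "Marketing", "application", "public", "Low", {"internet-facing"}),
]


def filter_assets(register: list[Asset], **criteria: str) -> list[str]:
    """Return names of assets whose attributes equal every keyword criterion."""
    return [a.name for a in register
            if all(getattr(a, key) == value for key, value in criteria.items())]


def with_condition(register: list[Asset], condition: str) -> list[str]:
    """Return names of assets that carry a given predisposing condition."""
    return [a.name for a in register if condition in a.predisposing_conditions]
```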

Select Evidence Collection Methods

NIST SP 800-30 doesn’t define how to gather data, but it does expect assessments to be supported by credible, sufficient information. Choosing the right method up front is essential for repeatability, audit readiness, and scale.

Structured questionnaires are the most scalable and consistent method for control-based assessments available today. Benefits include:

- Scalability: Assess dozens of systems and vendors in parallel, without interviews or direct access

- Consistency: Send the same questions to every respondent and reduce interpretation errors

- Audit readiness: Include attached documentation (e.g., screenshots, logs, policies) in responses for traceability

Other evidence collection methods include:

Automated evidence collection: Tools like Vanta or Drata pull configuration data from cloud platforms or endpoints.

- Pros: Efficient for technical controls (e.g., encryption settings, access policies)

- Cons: Limited platform support, often requires elevated access, and unsuitable for vendors or hybrid environments

Manual document review: Useful for process-based controls, but slow and unstructured.

Interviews or workshops: Best for new systems or high-risk areas, but difficult to scale and prone to inconsistency.

Step 2 – Conduct the Assessment

Conducting the assessment means gathering evidence, analyzing control implementation, and evaluating risk. In a control-based model, this work is driven by structured questionnaires, asset context, and system-level implementation data, not assumptions about threats. At a high level, this step answers three questions:

- What’s actually implemented?

- Where are the gaps?

- What could go wrong as a result?

NIST SP 800-30 defines this phase as identifying threats, vulnerabilities, predisposing conditions, and estimating the likelihood and impact of adverse events. In practice, that means using real-world control status to model risk in a structured, repeatable way.

This phase includes four substeps.

Distribute Questionnaires and Collect Evidence

Structured questionnaires are the backbone of a control-based risk assessment. Use them to assess control implementation across systems, vendors, and business units. To collect evidence at scale:

Send role-specific questionnaires. Tailor questions based on the control mappings and system metadata defined in step one.

Request implementation status and documentation. For each control, require one of the following response types:

- Fully implemented

- Partially implemented

- Not implemented

Supporting evidence (e.g., screenshots, policies, config logs) should be attached or linked.

Standardize answer types. Use clearly defined response scales (e.g., Yes, No, N/A) or a capability maturity model (e.g., 0-5), depending on the control set and assessment goals.

Clarify expectations. Define what qualifies as a valid response, what level of documentation is required, and the timeline for completion.

Track participation and coverage. Monitor submissions, follow up on missing responses, and flag known gaps in data or evidence quality.
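Participation and evidence tracking can be automated once responses are structured. In this sketch, the function name and data shapes are assumptions. A respondent's answers are compared against the controls assigned to them to find missing responses and implementation claims that have no supporting evidence attached.

```python
from enum import Enum


class Status(Enum):
    FULL = "Fully implemented"
    PARTIAL = "Partially implemented"
    NONE = "Not implemented"


def review_responses(expected: set[str],
                     responses: dict[str, tuple[Status, list[str]]]) -> dict[str, list[str]]:
    """Summarize coverage and evidence quality for one respondent.

    expected  -- control IDs assigned to this respondent
    responses -- control ID -> (status, list of evidence links)
    """
    missing = sorted(expected - set(responses))
    # A claim of full or partial implementation with nothing attached needs follow-up.
    unsupported = sorted(
        control for control, (status, evidence) in responses.items()
        if status is not Status.NONE and not evidence
    )
    return {"missing": missing, "unsupported": unsupported}


report = review_responses(
    {"AC-2", "AU-2", "CP-9"},
    {"AC-2": (Status.FULL, ["mfa-policy.pdf"]), "AU-2": (Status.PARTIAL, [])},
)
```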

Identify Control Gaps, Vulnerabilities, and Predisposing Conditions

After collecting evidence, analyze responses to surface gaps and identify system traits that affect the likelihood of risk. To identify risk conditions:

Review control status. Focus on controls marked “partially implemented” or “not implemented,” especially those without supporting documentation.

Confirm and validate gaps. Determine whether each gap reflects a true implementation issue or a documentation problem. Flag unclear responses for follow-up.

Document predisposing conditions. Record environmental or architectural factors that increase risk likelihood, including:

- Internet exposure

- Weak or shared administrative access

- Infrequent patching or maintenance

- Lack of monitoring, logging, or redundancy

Group any related findings. Where appropriate, consolidate similar findings (e.g., multiple systems missing audit logging) to streamline analysis and modeling in the next step.
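Grouping related findings is a simple aggregation once responses share a common format. The sketch below assumes results arrive as (system, control, status) tuples and treats partial or missing implementation as a gap. Real data would also need the validation described above.

```python
from collections import defaultdict

# Hypothetical questionnaire results: (system, control, status).
RESULTS = [
    ("payroll-app", "audit_logging", "Not implemented"),
    ("crm", "audit_logging", "Partially implemented"),
    ("crm", "backups", "Fully implemented"),
    ("build-server", "audit_logging", "Not implemented"),
]

GAP_STATUSES = {"Partially implemented", "Not implemented"}


def group_gaps(results: list[tuple[str, str, str]]) -> dict[str, list[str]]:
    """Consolidate gaps by control so one finding can cover every affected system."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for system, control, status in results:
        if status in GAP_STATUSES:
            grouped[control].append(system)
    return dict(grouped)


print(group_gaps(RESULTS))  # {'audit_logging': ['payroll-app', 'crm', 'build-server']}
```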

Analyze Risk Using Likelihood and Impact Ratings

Once gaps and conditions are identified, assess the risk using a structured scoring model. The fundamental question to answer is: if this control is missing, what could happen, and how bad would it be? To evaluate and score risks:

Link each control gap to a plausible threat event. For example:

- Missing access control → unauthorized access

- No logging → undetected compromise

- No backups → irreversible data loss

Estimate likelihood. Use exposure, predisposing conditions, threat intelligence, and historical data to score likelihood as High, Moderate, or Low.

Estimate impact. Score impact on the same scale by considering the potential consequences of a successful threat event:

- Data breach or confidentiality loss

- Service disruption or ransomware (availability)

- Data tampering or fraud (integrity)

- Regulatory penalties or reputational damage

Justify each rating. Document a short rationale for both likelihood and impact to support traceability and future audits.
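Scoring rules like these can be written down so every analyst applies them the same way. The sketch below is illustrative only: the starting levels, the set of aggravating conditions, and the data-classification weights are assumptions. It also leaves out threat intelligence and historical data, which a real model would weigh as well.

```python
LEVELS = ["Low", "Moderate", "High"]

# Hypothetical starting points (indexes into LEVELS); tune these to your own risk frame.
BASE_LIKELIHOOD = {"Not implemented": 1, "Partially implemented": 0}
AGGRAVATING = {"internet-facing", "shared-admin-access", "patch-backlog", "no-monitoring"}
IMPACT_BY_DATA = {"public": 0, "confidential": 1, "regulated": 2}


def estimate_likelihood(status: str, conditions: set[str]) -> str:
    """Start from the gap status and raise one level per aggravating condition, capped at High."""
    index = BASE_LIKELIHOOD[status] + len(conditions & AGGRAVATING)
    return LEVELS[min(index, len(LEVELS) - 1)]


def estimate_impact(data_classification: str, business_critical: bool) -> str:
    """Rate impact from data sensitivity, raised one level for business-critical systems."""
    index = IMPACT_BY_DATA[data_classification] + (1 if business_critical else 0)
    return LEVELS[min(index, len(LEVELS) - 1)]
```

Encoding the rules does not replace the written rationale; it just makes sure two analysts looking at the same facts start from the same rating.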

Document and Prioritize Risks

Score each gap and enter it into a structured risk register for tracking, prioritization, and response planning. To document and rank risks:

Apply the risk matrix. Combine likelihood and impact ratings to assign a final risk level (High, Moderate, or Low), based on the scoring model established in step one.

Create structured register entries. For each risk entry, capture:

- Risk title and short description

- Associated control gap

- Likelihood and impact scores

- Predisposing conditions

- Affected system, vendor, or business unit

- Assigned owner

- Date identified

Prioritize risks for response. Use the risk register to identify which issues require immediate attention and which can be addressed over time or accepted with documented rationale.
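Applying the matrix and ranking the register can be sketched in a few lines of Python. The 3×3 matrix below is a hypothetical example (substitute the scoring model agreed in Step 1), and the entry fields mirror the list above. Ties within a risk level are broken by age so older findings surface first.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical 3x3 matrix: (likelihood, impact) -> risk level.
MATRIX = {
    ("Low", "Low"): "Low",
    ("Low", "Moderate"): "Low",
    ("Low", "High"): "Moderate",
    ("Moderate", "Low"): "Low",
    ("Moderate", "Moderate"): "Moderate",
    ("Moderate", "High"): "High",
    ("High", "Low"): "Moderate",
    ("High", "Moderate"): "High",
    ("High", "High"): "High",
}
RANK = {"High": 0, "Moderate": 1, "Low": 2}


@dataclass
class RiskEntry:
    title: str
    control_gap: str
    likelihood: str
    impact: str
    conditions: list[str]
    affected: str
    owner: str
    identified: date

    @property
    def level(self) -> str:
        """Final risk level from the shared matrix."""
        return MATRIX[(self.likelihood, self.impact)]


def prioritize(entries: list[RiskEntry]) -> list[RiskEntry]:
    """Highest risk level first; oldest finding first within the same level."""
    return sorted(entries, key=lambda e: (RANK[e.level], e.identified))
```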

Step 3 – Communicate and Respond to Risk

After risks have been identified and scored, the next step is to decide what to do about them. In a control-based model, this means reviewing each risk entry for accuracy, assigning ownership, selecting a response, and making sure those decisions are carried through.

NIST SP 800-30 defines this step as communicating risks to stakeholders and identifying appropriate responses. In practice, it requires structure, documentation, and follow-through.

This phase includes three operational substeps.

Review and Validate the Risk Register

The risk register is only useful if its entries are accurate, complete, and assigned. This step is about making sure each risk is clearly defined, fully contextualized, and owned by someone with the authority to act. To review and validate the register:

Verify completeness. Each risk entry should include:

- Title and short description

- Linked control deficiency

- Likelihood and impact scores

- Predisposing conditions

- Affected system or vendor

- Assigned owner

- Date identified

Hold stakeholder review sessions. Schedule structured reviews with risk owners, department heads, and system leads, especially for High or Moderate risks tied to critical assets or regulated environments.

Validate assumptions and ratings. Encourage reviewers to challenge scoring, update context, and flag compensating controls or recent changes.

Confirm ownership. Every risk must have a designated point of contact responsible for coordinating the response and reporting status. No owner, no progress.
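A completeness check like this is easy to run before every review session. The sketch assumes register entries are plain dictionaries using hypothetical field names that mirror the list above. It reports blank or missing fields per entry, which makes a missing owner hard to overlook.

```python
REQUIRED_FIELDS = (
    "title", "description", "control_gap", "likelihood", "impact",
    "predisposing_conditions", "affected_asset", "owner", "date_identified",
)


def incomplete_entries(register: list[dict]) -> dict[str, list[str]]:
    """Map each entry's title (or position) to the required fields it lacks.

    An empty list of predisposing conditions is allowed; a missing key,
    None, or a blank string counts as incomplete.
    """
    report = {}
    for position, entry in enumerate(register):
        missing = [name for name in REQUIRED_FIELDS if entry.get(name) in (None, "")]
        if missing:
            report[entry.get("title") or f"entry #{position}"] = missing
    return report
```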

Select and Document Risk Responses

Every risk in the register needs a documented response. NIST's risk management guidance (SP 800-39) describes four main response strategies: mitigate, accept, transfer (or share), or avoid. To select and document risk responses:

Restate each risk clearly. Use plain language to summarize:

- What could go wrong

- Why it matters

- Which system or vendor would be affected

Choose a response type:

- Mitigate: Reduce the likelihood or impact by implementing or improving controls.

- Accept: Take no immediate action. Often appropriate for Low risks or well-understood issues with limited consequences.

- Transfer: Shift responsibility to a third party (e.g., insurance policy, vendor contract, SLA).

- Avoid: Discontinue the system, activity, or relationship causing the risk (e.g., decommission a legacy app).

Document rationale. Record why the chosen response makes sense – based on cost-benefit tradeoffs, existing controls, risk appetite, or operational constraints.

Assign action owners and deadlines. For mitigated or avoided risks, assign responsible teams and define expectations, including due dates and status tracking.
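Those documentation rules can be enforced at the point of entry. This is a minimal sketch under assumed field names. It checks that the response type is one of the four options, that a rationale exists, and that mitigated or avoided risks carry an action owner and a due date.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

RESPONSES = {"mitigate", "accept", "transfer", "avoid"}


@dataclass
class RiskResponse:
    risk_title: str
    response: str
    rationale: str
    action_owner: Optional[str] = None
    due: Optional[date] = None

    def problems(self) -> list[str]:
        """Return documentation gaps: response type, rationale, and owner/deadline where required."""
        issues = []
        if self.response not in RESPONSES:
            issues.append("response must be mitigate, accept, transfer, or avoid")
        if not self.rationale.strip():
            issues.append("rationale is required")
        if self.response in {"mitigate", "avoid"} and (not self.action_owner or self.due is None):
            issues.append("mitigated or avoided risks need an action owner and a due date")
        return issues
```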

Communicate Decisions and Track Progress

Risk decisions don’t mean much if they’re not communicated or followed through. This step is about sharing decisions, embedding them into workflows, and monitoring them over time. To operationalize the response process:

Notify impacted teams. Make sure system owners, control implementers, and business leaders know what decisions were made and what’s expected of them.

Create work items. For risks requiring action, enter remediation tasks into project management tools (e.g., Jira, ServiceNow) with defined scope, deadlines, and success criteria.

Monitor via dashboards or reports. Track:

- Open risks by severity

- Progress on mitigation tasks

- Count and age of accepted risks

Set a risk review cadence. Revisit open and accepted risks regularly (e.g., quarterly) to reassess them in light of new threats, system changes, or improved controls.
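Two of those dashboard metrics can be computed directly from register data. The sketch assumes a simplified, hypothetical row format of (severity, status, acceptance date). Progress on mitigation tasks would usually come from the ticketing system instead.

```python
from collections import Counter
from datetime import date

# Hypothetical register rows: (severity, status, date accepted or None).
RISKS = [
    ("High", "open", None),
    ("Moderate", "open", None),
    ("High", "open", None),
    ("Low", "accepted", date(2024, 1, 15)),
    ("Moderate", "accepted", date(2024, 4, 1)),
    ("High", "closed", None),
]


def dashboard(risks: list[tuple], today: date) -> dict:
    """Summarize open risks by severity plus the count and oldest age (days) of accepted risks."""
    open_by_severity = Counter(sev for sev, status, _ in risks if status == "open")
    accepted_ages = [(today - accepted).days
                     for _, status, accepted in risks if status == "accepted"]
    return {
        "open_by_severity": dict(open_by_severity),
        "accepted_count": len(accepted_ages),
        "oldest_accepted_days": max(accepted_ages, default=0),
    }


summary = dashboard(RISKS, today=date(2024, 7, 1))
```

Tracking the age of accepted risks, not just the count, helps surface acceptances that were meant to be temporary.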

Step 4 – Maintain the Assessment

Maintaining a NIST SP 800-30 risk assessment means keeping risk data current as systems, threats, and controls change over time. In a control-based model, this involves monitoring for changes, reassessing controls, and embedding risk tracking into regular security operations.

NIST SP 800-30 defines this as the final step of the risk assessment process: ensuring that documented risks continue to reflect the organization’s actual operating environment.

This phase includes three operational substeps.

Monitor Risk Factors and Environmental Changes

Teams need ongoing visibility into the systems, controls, and external factors that shape risk. SP 800-30 emphasizes the importance of staying informed about both internal and external changes. To monitor change effectively:

Track system and asset changes. Monitor newly added systems, tools, or vendors; decommissioned or repurposed assets; and changes to ownership or administration model (e.g., a system moved to a third party).

Track control changes. Monitor newly implemented, retired, or reconfigured controls; manual processes replaced with automation; and temporary workarounds made permanent.

Monitor data and usage changes. Watch for systems that start processing more sensitive data, sudden increases in availability requirements (e.g., new uptime SLAs), and excessive access across roles, teams, or vendors.

Monitor threat and vulnerability intel. Subscribe to ISACs, CVE feeds, and vendor bulletins, and adjust assumptions based on new tactics or threat actor behavior.

Respond to trigger events. Examples include:

- Compliance obligation changes (e.g., SOC 2, HIPAA)

- Security incidents or near misses

- System rebuilds, M&A activity, or key personnel turnover
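Some of these changes can be detected automatically by comparing successive snapshots of an asset inventory. The sketch below assumes a simple dictionary per asset with hypothetical fields (`data_classification`, `owner`, `internet_facing`). Both the fields and the ordering of classification levels are illustrative and should be replaced with your inventory's own schema.

```python
# Assumed classification scheme, ordered from least to most sensitive.
CLASSIFICATION_ORDER = ["public", "internal", "confidential", "restricted"]


def detect_triggers(before: dict[str, dict], after: dict[str, dict]) -> dict[str, list[str]]:
    """Compare two inventory snapshots (asset id -> attributes) and list reassessment triggers."""
    triggers: dict[str, list[str]] = {}
    for asset_id, new in after.items():
        old = before.get(asset_id)
        reasons = []
        if old is None:
            reasons.append("new asset added")
        else:
            old_level = CLASSIFICATION_ORDER.index(old["data_classification"])
            new_level = CLASSIFICATION_ORDER.index(new["data_classification"])
            if new_level > old_level:
                reasons.append("now processes more sensitive data")
            if new["owner"] != old["owner"]:
                reasons.append("ownership changed")
            if new["internet_facing"] and not old["internet_facing"]:
                reasons.append("newly internet-facing")
        if reasons:
            triggers[asset_id] = reasons
    # Assets missing from the new snapshot were decommissioned or repurposed.
    for asset_id in before.keys() - after.keys():
        triggers[asset_id] = ["removed from inventory"]
    return triggers


if __name__ == "__main__":
    before = {"crm": {"data_classification": "internal", "owner": "sales-it", "internet_facing": False}}
    after = {
        "crm": {"data_classification": "confidential", "owner": "vendor-x", "internet_facing": False},
        "wiki": {"data_classification": "internal", "owner": "it", "internet_facing": True},
    }
    print(detect_triggers(before, after))
    # {'crm': ['now processes more sensitive data', 'ownership changed'], 'wiki': ['new asset added']}
```

Diffs like this only cover internal changes. External triggers such as new threat intelligence or regulatory changes still need a human to log them.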

Reassess Controls and Update the Risk Register

Risk scoring should reflect current conditions, not last year’s questionnaire. Reassessments validate whether controls are still implemented, risks are still relevant, and new issues have emerged. To reassess effectively:

Update assessment templates. Incorporate new controls or control families, add logic to capture new system traits or threat actors, and apply lessons from past assessments.

Redistribute assessments on a defined cadence.

- Annually for most systems

- Quarterly for high-risk or regulated systems

- Immediately after trigger events
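The cadence above reduces to a simple due-date rule. This sketch makes several assumptions. Most systems are reassessed annually (365 days). High-risk or regulated systems are reassessed quarterly, approximated as 91 days. A trigger event recorded after the last assessment makes reassessment due immediately. These intervals are simplifications, not values prescribed by SP 800-30.

```python
from datetime import date, timedelta
from typing import Optional

ANNUAL = timedelta(days=365)
QUARTERLY = timedelta(days=91)  # assumed approximation of one quarter


def next_assessment_due(
    last_assessed: date,
    high_risk_or_regulated: bool,
    trigger_event_on: Optional[date] = None,
) -> date:
    """Return the date the next reassessment is due."""
    interval = QUARTERLY if high_risk_or_regulated else ANNUAL
    scheduled = last_assessed + interval
    # A trigger event after the last assessment pulls the due date forward to the event date.
    if trigger_event_on is not None and trigger_event_on > last_assessed:
        return min(scheduled, trigger_event_on)
    return scheduled


if __name__ == "__main__":
    print(next_assessment_due(date(2024, 1, 15), high_risk_or_regulated=False))  # 2025-01-14
    print(next_assessment_due(date(2024, 1, 15), True))  # 2024-04-15
    print(next_assessment_due(date(2024, 1, 15), False, trigger_event_on=date(2024, 3, 1)))  # 2024-03-01
```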

Re-score risks using updated context.

- Downgrade risks where controls have improved

- Escalate risks where exposure has increased

- Close resolved entries with attached verification

Track historical changes. Document:

- Which risks were mitigated, accepted, or reclassified

- How likelihood and impact ratings shifted over time

- Which control gaps keep recurring, and where
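Re-scoring and history tracking can share one data structure. Each rating change is appended to the risk's history instead of overwriting it. The sketch below assumes a three-level scale (Low, Moderate, High) and uses a hypothetical `RegisterEntry` class. Closing a risk requires a verification reference, mirroring the rule above. The control ID shown in the usage note (IA-2(1), multi-factor authentication for privileged accounts in NIST SP 800-53) is only an example.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional

LEVELS = ["Low", "Moderate", "High"]  # assumed organizational scale, lowest first


@dataclass
class RegisterEntry:
    risk_id: str
    control_id: str
    level: str
    status: str = "open"
    # Each history item: (date, change, direction, note)
    history: list[tuple[date, str, str, str]] = field(default_factory=list)

    def rescore(self, new_level: str, on: date, reason: str) -> str:
        """Change the rating, log it, and report whether it was a downgrade or an escalation."""
        if new_level not in LEVELS:
            raise ValueError(f"unknown level: {new_level}")
        old = self.level
        direction = "unchanged"
        if LEVELS.index(new_level) < LEVELS.index(old):
            direction = "downgraded"
        elif LEVELS.index(new_level) > LEVELS.index(old):
            direction = "escalated"
        self.history.append((on, f"{old} -> {new_level}", direction, reason))
        self.level = new_level
        return direction

    def close(self, on: date, verification: Optional[str]) -> None:
        """Close a resolved entry; refuse to close it without attached verification."""
        if not verification:
            raise ValueError("closing a risk requires verification evidence")
        self.history.append((on, f"{self.status} -> closed", "closed", verification))
        self.status = "closed"
```

For example, `RegisterEntry("R-014", "IA-2(1)", "High")` could be downgraded to Moderate once MFA is enforced for administrators, then closed with a reference to the exported identity provider policy. The full trail stays in `history`, which supports the historical questions listed above.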

Institutionalize Risk Monitoring as a Continuous Process

Long-term sustainability depends on making risk maintenance a regular part of operations, not a once-a-year priority. To operationalize ongoing maintenance:

Run regular risk review cycles. Hold quarterly risk review meetings to examine unresolved risks, track mitigation progress, reevaluate accepted risks, and approve response updates.

Integrate with business and IT workflows. Add risk checkpoints to:

- Change management and deployment pipelines

- Vendor onboarding and offboarding

- Annual budget and roadmap planning

Use tooling to maintain visibility. Dashboards or risk reports should show:

- Open risks by severity, system, or owner

- Mitigation status and overdue actions

- Accepted risks nearing expiration

- Risk assessment coverage across the org

Assign long-term ownership. Designate a GRC, Security, or Enterprise Risk team to maintain the risk register, trigger reassessments, coordinate updates with stakeholders, and monitor risk posture as a standing function, not an ad hoc task.

NIST SP 800-30 Best Practices

Implementing NIST SP 800-30 with a control-based approach makes it more scalable and produces better documentation, cleaner risk decisions, and closer alignment across teams. Even so, getting a control-first 800-30 risk assessment right takes some strategy.

The best practices below are designed to help teams avoid common challenges and build assessments that are useful from the start.

Start with a Pre-Mapped Control Set

Pre-mapping controls is one of the best ways to simplify NIST SP 800-30 risk assessments. Instead of assigning controls from scratch for each system, teams can start with a baseline that reflects their regulatory requirements, risk tolerance, and business priorities. Then, they can apply that baseline automatically using system metadata (e.g., data type, business function, environment, owner).

This best practice mirrors the ‘Select’ step of the NIST RMF (SP 800-37), in which teams tailor a set of baseline controls based on system categorization to prepare for downstream assessments. Pre-mapped controls can also help organizations align more closely with how federal risk programs are expected to function.

To implement this best practice, organizations can:

Choose the right control framework. Select a control framework that aligns with your needs, goals, and systems.

- NIST SP 800-53: Best for systems subject to FISMA, FedRAMP, the HIPAA Security Rule, or other strict requirements

- NIST CSF (Cybersecurity Framework): Best for outcome-oriented assessments that map across control sets

- CIS Controls: Best for a lighter-weight approach in smaller environments or early-stage risk programs

If needed, combine or tailor frameworks based on system type, data classification, or regulatory scope.

Tailor control sets by system context. Use asset inventory fields (e.g., data classification, criticality, system type) to assign the appropriate control families (e.g., from NIST SP 800-53) to each system. A cloud-hosted system storing PHI, for instance, should inherit access control, encryption, and audit logging controls by default.

Document control tailoring decisions. Record exclusions and justifications (e.g., inherited controls, alternate implementations, non-applicability), and maintain mappings that show which controls apply to which systems, and why.
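Metadata-driven control assignment can be expressed as a set of rules, where each rule adds control families when a system matches. The sketch below is hypothetical. The rule conditions, the metadata keys (`data_type`, `environment`), and the family choices are assumptions for illustration. The identifiers are NIST SP 800-53 family abbreviations. Tailoring decisions are returned alongside the result so that every exclusion stays documented.

```python
from dataclasses import dataclass
from typing import Callable

System = dict[str, str]


@dataclass
class Rule:
    description: str
    applies: Callable[[System], bool]
    families: set[str]


# Hypothetical baseline rules. NIST SP 800-53 families used: AC (access control),
# AU (audit and accountability), SC (system and communications protection),
# CM (configuration management), RA (risk assessment), SA (system and services acquisition).
RULES = [
    Rule("baseline for every system", lambda s: True, {"CM", "RA"}),
    Rule("stores PHI or PII", lambda s: s.get("data_type") in {"PHI", "PII"}, {"AC", "AU", "SC"}),
    Rule("cloud-hosted", lambda s: s.get("environment") == "cloud", {"SA"}),
]


def assign_controls(system: System, exclusions: dict[str, str]) -> tuple[set[str], list[str]]:
    """Return the system's control families plus a log explaining each decision.

    `exclusions` maps a family to its tailoring justification (e.g., an inherited control).
    Tailoring happens at the family level here for brevity; real programs usually
    tailor individual controls.
    """
    families: set[str] = set()
    log: list[str] = []
    for rule in RULES:
        if rule.applies(system):
            families |= rule.families
            log.append(f"added {sorted(rule.families)}: {rule.description}")
    for family, justification in exclusions.items():
        # An exclusion without a written justification is rejected outright.
        if not justification.strip():
            raise ValueError(f"exclusion of {family} needs a documented justification")
        if family in families:
            families.discard(family)
            log.append(f"excluded {family}: {justification}")
    return families, log
```

For a cloud-hosted system storing PHI, with SA excluded as inherited from the provider, this yields AC, AU, CM, RA, and SC. The log records both the rules that fired and the justification for the exclusion.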

With pre-mapped control sets, teams can skip repetitive tasks like control selection and focus on gathering meaningful evidence instead. Pre-mapping also makes risk assessments easier to scale, defend, and maintain over time.

But to make it work, organizations will need more than spreadsheets, templates, and email inboxes. Instead, they need an IT risk management solution that can assign tailored control sets to each system, map them automatically using asset metadata, and track overrides and exceptions, all in one place.

Use Role-Based Questionnaires

A control-based approach works best when organizations use role-based questionnaires to collect evidence directly from the people responsible for each control. Role-based questionnaires streamline this process by asking only about the controls the respondents actually own. This can cut review time, shorten follow-up cycles, and reduce irrelevant or incomplete answers. To implement this best practice, organizations can:

Define respondent types. Identify the roles involved in the assessment (e.g., system owners, IT administrators, security engineers, third-party vendors). Each role should have a questionnaire tailored to its specific control responsibilities.

Segment questionnaires by control ownership. Align each questionnaire with a targeted subset of the control set. For example:

- Vendors: Data protection, access controls

- Application teams: Authentication, logging, secure development

- Infrastructure teams: Patching, network segmentation, encryption


Standardize language and scoring. Use the same structure, response scales, and evidence requirements across questionnaire types so reviewers can interpret results consistently.
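Segmenting by ownership amounts to grouping the control set by responsible role and attaching one shared response scale. The sketch below assumes a hypothetical control-to-role ownership map that loosely follows the examples above, plus an illustrative four-option scale. It is not a questionnaire format from NIST or from any specific tool.

```python
from collections import defaultdict

# One shared scale so reviewers interpret every questionnaire the same way (assumed options).
RESPONSE_SCALE = ["Implemented", "Partially Implemented", "Not Implemented", "Not Applicable"]

# Hypothetical ownership map: control -> (owning role, question text).
CONTROL_OWNERS = {
    "data-encryption": ("vendor", "Is customer data encrypted at rest?"),
    "vendor-access-review": ("vendor", "Are access rights to customer data reviewed?"),
    "mfa": ("application", "Is MFA enforced for all user logins?"),
    "app-logging": ("application", "Are security events logged centrally?"),
    "patching": ("infrastructure", "Are critical patches applied within the agreed SLA?"),
    "segmentation": ("infrastructure", "Is the system network-segmented?"),
}


def build_questionnaires(owners: dict[str, tuple[str, str]]) -> dict[str, list[dict]]:
    """Group controls by owning role into one questionnaire per role."""
    questionnaires: dict[str, list[dict]] = defaultdict(list)
    for control_id, (role, question) in owners.items():
        questionnaires[role].append(
            {"control": control_id, "question": question, "choices": RESPONSE_SCALE}
        )
    return dict(questionnaires)
```

Each role then receives only its own list, so an application team never sees patching questions. Every answer still maps back to a control ID for scoring.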

Role-based questionnaires are one of the most efficient evidence-collection tools, but they aren’t the only option. In a control-based NIST SP 800-30 assessment – especially for third-party risk management – the right evidence collection method often depends on the control itself.

NIST SP 800-30 Evidence Collection Methods

Matching the collection method to the control is key to collecting accurate, defensible evidence. Some controls can be verified with a simple configuration screenshot from a system dashboard (e.g., minimum password length in an identity provider). Others – personnel background checks, disaster recovery tests, or physical badge access – require document review, in-person verification, or interactive testing.

Pick the wrong method, and a control that looks compliant on paper may fail in practice. Here are the most common evidence collection methods, with the pros and cons of each.

| Technique | Best For | Advantages | Disadvantages |
| --- | --- | --- | --- |
| Role-Based Questionnaires | Management and operational controls; vendor assessments | Scales across large environments; low tech overhead; standardized | Relies on self-attestation; quality varies |
| Automated Collection (e.g., API/agent) | Cloud/SaaS configs, IAM settings | Objective, current data; continuous updates | Limited to API-accessible data; blind spots on legacy systems |
| Vulnerability & Config Scanning | Patch status, secure configuration | Objective, repeatable; maps directly to RA-5 and SI-2 controls | Generates false positives; technical scope only |
| Pen Testing / Red Team | End-to-end control validation | Shows real-world exploitability and impact | Expensive; point-in-time |
| Document Review | Policies, procedures, diagrams | Critical for management controls; no tech requirements | Time-consuming; can be outdated |
| Interviews / Workshops | Complex or new processes | Captures nuance automation misses | Subjective; slower to execute |
| Direct Observation | Physical security, procedural checks | Verifies actual practice; uncovers shortcuts | On-site only; limited sampling |
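The table's guidance can be encoded as a first-pass lookup that suggests collection methods from a few control attributes. The `ControlProfile` attributes and the rule order below are assumptions. In practice, teams would review and override these suggestions per control, and would add penetration tests or interviews where the table calls for them.

```python
from dataclasses import dataclass


@dataclass
class ControlProfile:
    # Hypothetical attributes describing how a control can be verified.
    api_accessible: bool = False    # configuration readable via an API or agent
    scannable: bool = False         # patch or configuration state a scanner can check
    physical: bool = False          # physical security or on-site procedure
    documented_policy: bool = False
    third_party: bool = False       # control operated by a vendor


def suggest_methods(c: ControlProfile) -> list[str]:
    """Suggest evidence collection methods, roughly from most to least objective."""
    methods = []
    if c.api_accessible:
        methods.append("Automated Collection")
    if c.scannable:
        methods.append("Vulnerability & Config Scanning")
    if c.physical:
        methods.append("Direct Observation")
    if c.documented_policy:
        methods.append("Document Review")
    # Self-attestation is the fallback, and the usual channel for vendor-operated controls.
    if c.third_party or not methods:
        methods.append("Role-Based Questionnaires")
    return methods
```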

Managing all of these inputs across controls, systems, and teams is slow and error-prone without automation. A platform like Isora GRC makes scaling much easier. With Isora, teams can:

- Assign the right collection method to each control automatically

- Link evidence directly to control status and risk scoring

- Keep all responses, artifacts, and validation steps traceable from start to finish

But collecting evidence is only useful if it leads to clear, actionable insights. In a control-based NIST SP 800-30 assessment, the next best practice is to connect each confirmed gap to a documented risk.

Link Each Risk to a Control Gap

NIST SP 800-30 expects teams to document risk, but it doesn’t tell them what that process should actually look like in a modern, control-based model. Too often, risks are written in vague or generic terms like “unauthorized access” or “data breach” with no clear connection to implementation evidence or system context. To make risk actionable, each entry should be tied directly to a missing or weak control. To implement this best practice, organizations can:

Use control status as the trigger. Define risks based on controls marked as “Not Implemented” or “Partially Implemented” to give every risk a verifiable origin.

Write risks in control-aware language. Describe what’s at stake using the control gap itself. For example:

- “Multi-factor authentication not enforced for external users.”

- “No audit logging configured on privileged accounts.”

- “Patch management is manual and lacks documentation.”

Include system and asset context. Make sure each risk references the affected system, vendor, or environment, along with any relevant metadata, like data type or business criticality.
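Once control status is the trigger, creating risks becomes mechanical. The Python sketch below turns each response marked "Not Implemented" or "Partially Implemented" into a register entry. Each entry names the control, states the gap in control-aware language, and carries the affected system's metadata. The record fields and the ID format are hypothetical, and the inventory is assumed to hold an entry for every assessed system.

```python
from dataclasses import dataclass

GAP_STATUSES = {"Not Implemented", "Partially Implemented"}


@dataclass
class ControlResponse:
    system: str
    control_id: str
    status: str
    gap_statement: str  # control-aware description written by the assessor


@dataclass
class RiskEntry:
    risk_id: str
    system: str
    control_id: str
    description: str
    data_type: str
    criticality: str


def risks_from_gaps(responses: list[ControlResponse], assets: dict[str, dict[str, str]]) -> list[RiskEntry]:
    """Create one risk entry per control gap, carrying the asset's metadata along."""
    entries = []
    for resp in responses:
        if resp.status not in GAP_STATUSES:
            continue  # implemented or not applicable: nothing to record
        meta = assets[resp.system]  # assumes every assessed system is in the inventory
        entries.append(
            RiskEntry(
                risk_id=f"{resp.system}:{resp.control_id}",
                system=resp.system,
                control_id=resp.control_id,
                description=f"{resp.gap_statement} ({resp.status})",
                data_type=meta["data_type"],
                criticality=meta["criticality"],
            )
        )
    return entries
```

Because every entry is derived from a specific response, each risk has a verifiable origin. Auditors can trace a risk back to the answer that created it.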

Unfortunately, most GRC tools aren’t designed to support this level of traceability. Instead, they tend to treat risks as static text fields, disconnected from control evidence or system metadata. To link risks directly to questionnaire responses, control status, and asset context, teams need a platform that turns incomplete or missing controls into structured risk entries, automatically and at scale.

A NIST SP 800-30 risk assessment tool like Isora GRC links every piece of evidence – from questionnaires, scans, or reviews – directly to each control it verifies. And, if one is missing or incomplete, Isora automatically flags it for risk analysis with the right context already attached.

Define Risk Review as a Governance Function

Risk reviews happen reactively in most organizations, triggered by audits, incidents, or compliance deadlines. But to keep a control-based NIST SP 800-30 process running, it needs to be structured into the organization’s governance calendar, with clear roles, review criteria, and expectations for follow-up. Only then will the risk register reflect current conditions rather than assumptions from a prior quarter. To implement this best practice, organizations can:

Establish a recurring review cycle. Hold risk review sessions at regular intervals (e.g., quarterly) to evaluate open risks, accepted risks, and risks that haven’t changed status since the last cycle.

Define scope and participants. Set rules for what gets reviewed (e.g., High and Moderate risks, unresolved mitigation, new findings), and assign a standing group responsible for review, challenge, and approval.

Capture outcomes in a structured format. For every risk discussed, record any changes in ownership, scoring, or response strategy, including mitigation deadlines or reassessment dates.
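Scope rules like these can be applied mechanically to build each session's agenda. The sketch assumes hypothetical risk fields (`level`, `status`, `created`, `last_status_change`, `mitigation_due`). It implements the example scope described above: High and Moderate risks, overdue mitigation, new findings since the last review, and risks whose status has not changed since then. Each risk is returned with the reasons it made the agenda, which can feed the structured outcome record.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Risk:
    risk_id: str
    level: str                  # "High", "Moderate", or "Low"
    status: str                 # e.g., "open", "accepted", "closed"
    created: date
    last_status_change: date
    mitigation_due: Optional[date] = None


def review_agenda(risks: list[Risk], last_review: date, today: date) -> dict[str, list[str]]:
    """Map each in-scope risk to the reasons it belongs on this cycle's agenda."""
    agenda: dict[str, list[str]] = {}
    for r in risks:
        if r.status == "closed":
            continue
        reasons = []
        if r.level in ("High", "Moderate"):
            reasons.append(f"{r.level} risk")
        if r.mitigation_due is not None and r.mitigation_due < today:
            reasons.append("mitigation overdue")
        if r.created > last_review:
            reasons.append("new finding")
        elif r.last_status_change <= last_review:
            reasons.append("no status change since last review")
        if reasons:
            agenda[r.risk_id] = reasons
    return agenda
```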

Most tools today can track open risks, but they can’t connect those risks to real control data, asset context, or governance workflows. To run effective reviews, teams need a platform that ties each risk to a control group, assigns owners, tracks response deadlines, and flags risks for follow-up based on system metadata or changes in control status.

Use a NIST SP 800-30 Risk Assessment Tool

NIST SP 800-30 is hard to sustain without the right tooling, even with a control-based process in place. Most risk assessments break down in spreadsheets, inboxes, or platforms that don’t tie control data to real risk decisions. Instead, teams need a tool that automatically connects evidence, scoring, ownership, and system context to manage and continuously monitor the process at scale.

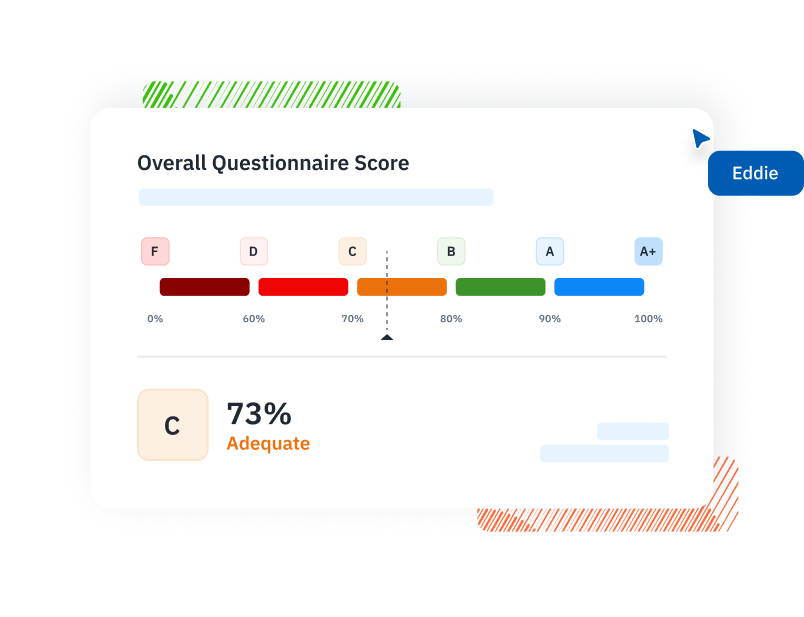

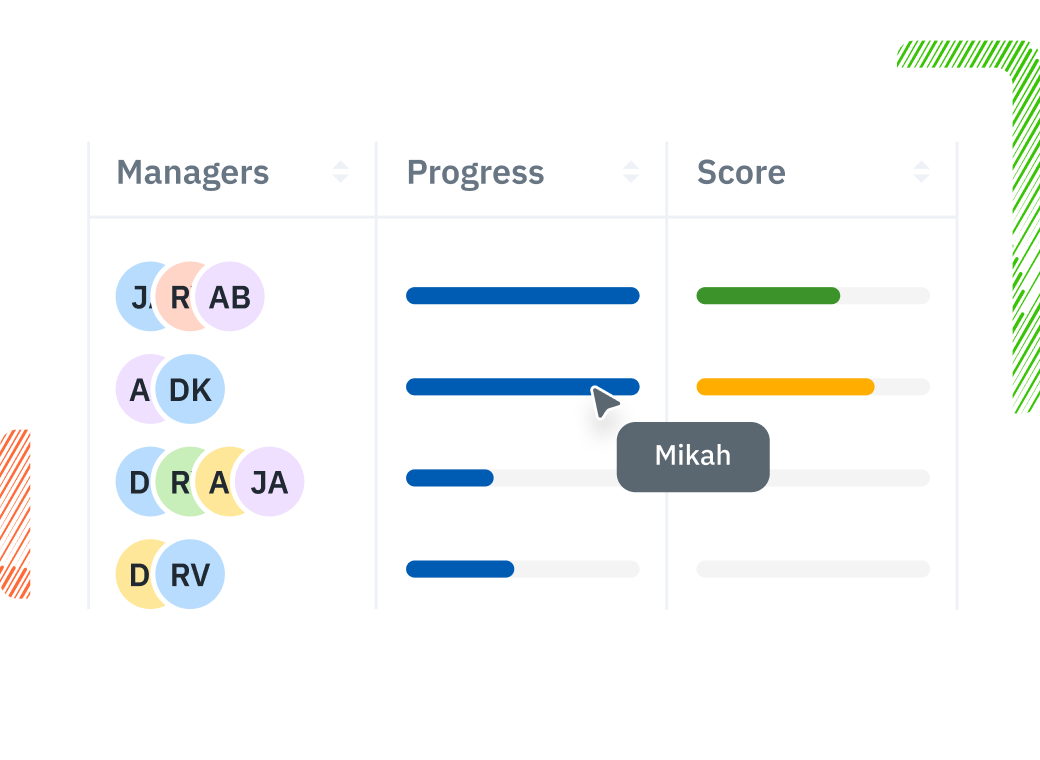

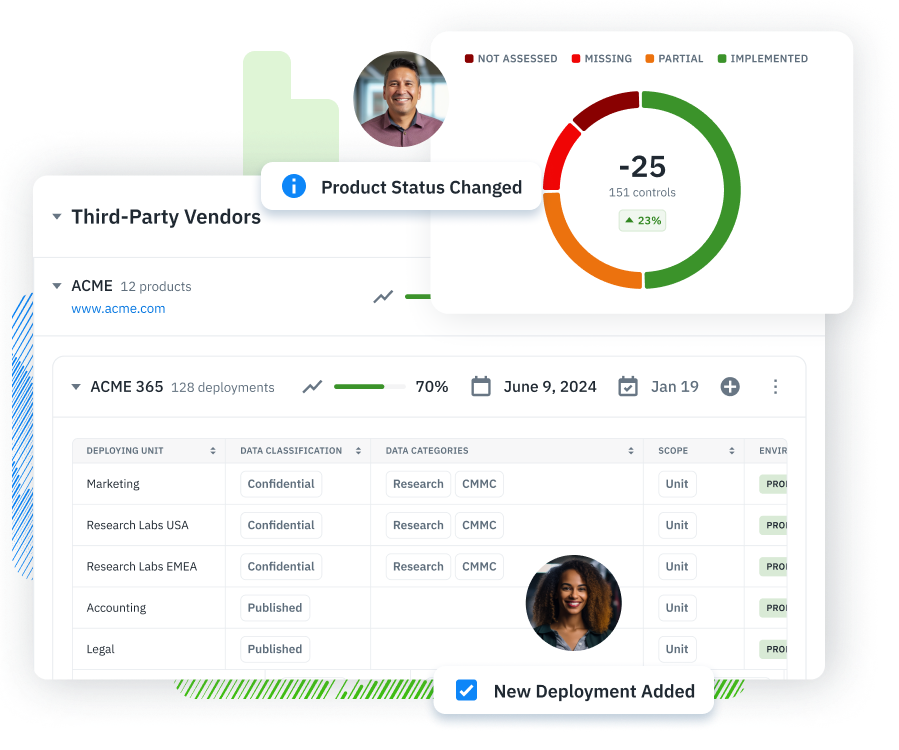

Isora GRC is a risk assessment platform built to support structured, control-based workflows. It helps teams collect evidence, analyze control gaps, generate risk entries, and track decisions, without losing context between systems, owners, or frameworks. Here’s how a risk assessment platform like Isora GRC can help:

Centralize asset, control, and risk data. Store asset metadata, control mappings, system context, and questionnaire responses in a single environment to eliminate manual duplication and enable role-specific assignments.

Automate risk creation from control gaps. Use logic-based workflows to turn missing or partially implemented controls into structured risk entries. Select from pre-filled fields like likelihood, impact, and ownership based on system tags and assessment logic.

Track response status across the lifecycle. Log mitigation actions, document exceptions, assign deadlines, and surface overdue items for follow-up. Support quarterly review cycles and audit preparation with up-to-date, filterable risk data.

The result is a NIST SP 800-30 risk assessment process that’s easier to run, explain, and scale.

How to Simplify NIST 800-30 with Isora GRC

Most teams struggle to implement NIST SP 800-30 because the framework assumes a level of coordination, consistency, and context that’s hard to maintain and impossible at scale. The only way to make the process repeatable is to approach it using a control-based model. And the only way to make that method sustainable is with the right platform.

Isora GRC is built to help teams implement NIST SP 800-30 using control implementation data. It centralizes asset context, control frameworks, questionnaire responses, and risk decisions into a single, structured system. It also enforces the connections that make the control-based approach work: every risk entry linked to a control gap, every response tracked to an owner, every change tied to system metadata.

Here’s exactly how Isora GRC maps to a control-based NIST SP 800-30 risk assessment model.

| NIST SP 800-30 | Control-Based Model | Isora GRC |
| --- | --- | --- |
| Threat Source | Implied by missing or partially implemented controls | Prebuilt logic + Questionnaires |
| Threat Event | Implied by missing or partially implemented controls | Risk register with rationale fields |
| Vulnerability | Implied by missing or partially implemented controls | Questionnaire response mapping to risk register |
| Predisposing Conditions | Captured via system metadata | Asset inventory fields |
| Security Control | Object of assessment | Control-aligned questionnaires with framework templates |
| Likelihood / Impact | Scored using system context and gap severity | Customizable risk matrix + scoring fields |
| Risk | Structured as control-linked, scored entries | Centralized risk register with audit trail |
| Risk Response | Documented via remediation or acceptance with owners and deadlines | Response logging, status tracking, and exceptions management |

NIST SP 800-30 FAQs

How long does it take to complete a NIST SP 800-30 risk assessment?

A NIST SP 800-30 risk assessment can take anywhere from several days to several weeks, depending on the number of systems in scope and how the assessment is managed. Manual assessments typically involve emailing spreadsheets, conducting interviews, and consolidating inputs by hand, which slows everything down.

But with Isora GRC, teams complete assessments faster using pre-mapped control sets, role-based questionnaires, and automated risk scoring. As soon as a control gap is identified, Isora automatically generates a risk entry and assigns ownership, without manual consolidation or review.

Can NIST SP 800-30 assessments be automated?

Yes, NIST SP 800-30 risk assessments can be automated with the right GRC platform. With a tool like Isora GRC, teams can distribute role-specific questionnaires to collect evidence directly from system owners, vendors, and control implementers and identify missing or partially implemented controls.

From there, Isora automatically generates a register entry for each risk that includes the control reference, affected system, likelihood and impact scores, and assigned owner. The result is a risk assessment process that produces consistent, reliable results.

When should I move from spreadsheets to a dedicated risk assessment tool for NIST SP 800-30?

Make the move from spreadsheets to a dedicated tool when NIST SP 800-30 risk assessments start to involve multiple systems, teams, or recurring review cycles. Opt for a tool like Isora GRC to support control-based risk assessments with structured workflows in place of static templates. It collects evidence via questionnaires, automatically maps risk to specific control gaps and system metadata, and tracks reassessment and risk mitigation over time.

How can I scale SP 800-30 across multiple business units or subsidiaries?

Scaling NIST SP 800-30 across business units requires a shared risk model, consistent control mappings, and repeatable workflows, all of which are difficult to manage manually. Instead, a solution like Isora GRC supports control-based risk assessments by design.

With Isora, administrators can define control frameworks centrally and apply them to systems automatically based on attributes like business unit, system type, or data classification. Each participant receives a questionnaire tailored to their control responsibilities to keep risk scores consistent. As respondents complete assessments, administrators can monitor a single dashboard for individual status updates and risk posture across teams.

How does NIST SP 800-30 integrate with third-party risk management programs?

NIST SP 800-30 supports third-party risk management by applying the same risk assessment process to vendors and service providers as to internal systems. Teams define the scope, assign a control set based on vendor access or data type, and evaluate responses to identify risk.

A tool like Isora GRC makes the third-party security risk management process manageable at scale. Teams can send structured questionnaires to vendors and track responses all in one place, while Isora generates risk entries directly from control gaps to keep third-party risks on the radar.

What risk metrics should I track for visibility across all risk assessments?

The most important risk metrics to track for visibility across NIST SP 800-30 risk assessments are risk exposure, follow-up activity, and assessment coverage. These metrics help risk owners monitor what’s unresolved, who’s responsible, and which systems need updated inputs.

Useful examples include:

- Number of open risks by severity

- Percentage of risks without assigned owners

- Number of risks past their mitigation deadlines

- Count of accepted risks nearing reassessment

- Systems that have not been assessed within the current review cycle
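These metrics can be computed directly from register and inventory data. In the sketch below, the record fields are assumptions chosen for illustration, as is the 30-day window used for "nearing reassessment". The ownership percentage is calculated over active (open or accepted) risks.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class RegisterRow:
    level: str                              # "High", "Moderate", or "Low"
    status: str                             # "open", "accepted", or "closed"
    owner: Optional[str]
    mitigation_due: Optional[date] = None
    acceptance_expires: Optional[date] = None


def risk_metrics(
    rows: list[RegisterRow],
    last_assessed: dict[str, date],
    cycle_start: date,
    today: date,
    window_days: int = 30,
) -> dict:
    """Summarize exposure, follow-up, and coverage metrics from register rows."""
    open_rows = [r for r in rows if r.status == "open"]
    active = [r for r in rows if r.status != "closed"]  # open or accepted
    horizon = today + timedelta(days=window_days)
    unowned = sum(1 for r in active if not r.owner)
    return {
        "open_by_severity": dict(Counter(r.level for r in open_rows)),
        "pct_active_without_owner": round(100 * unowned / len(active), 1) if active else 0.0,
        "past_mitigation_deadline": sum(
            1 for r in open_rows if r.mitigation_due is not None and r.mitigation_due < today
        ),
        "accepted_nearing_reassessment": sum(
            1
            for r in rows
            if r.status == "accepted"
            and r.acceptance_expires is not None
            and today <= r.acceptance_expires <= horizon
        ),
        "systems_not_assessed_this_cycle": sorted(
            system for system, assessed in last_assessed.items() if assessed < cycle_start
        ),
    }
```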

Isora GRC tracks these metrics automatically using questionnaire responses, control status, and system metadata. It shows teams exactly what’s missing, what’s overdue, and what’s changed to keep the risk register both current and complete.

How do I align NIST SP 800-30 with ISO/IEC 27001?

To align NIST SP 800-30 with ISO/IEC 27001, apply the SP 800-30 risk assessment process to the control set used in your ISO 27001 program, such as ISO/IEC 27001 Annex A or NIST SP 800-53. ISO 27001 calls for a structured approach to identifying and treating information security risks, but it doesn’t prescribe a specific method. SP 800-30 can supply that method.

Isora GRC makes this alignment easier by letting teams assess any control framework using a consistent, structured process. With Isora, teams can collect evidence, analyze gaps, and score risks across ISO and NIST frameworks without duplicating work.

How do I sync risk assessments to Jira, ServiceNow, or other remediation tools?

The best way to link NIST SP 800-30 risk assessments to remediation workflows is with a GRC platform that supports task creation and integration. A tool like Isora GRC converts risk register entries into remediation items and automatically syncs them to Jira and ServiceNow. With Isora, teams can track progress from a single dashboard with complete visibility across systems and teams.
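A sync like this generally works by turning a register entry into an issue-creation request. The sketch below builds a request body but does not send it. The body follows the shape accepted by Jira's REST API v2 create-issue endpoint (`POST /rest/api/2/issue`). Several values are assumptions: the project key, the `Task` issue type, and the mapping of risk levels to Jira priority names. Priority schemes and create-screen fields vary by Jira instance. This is a generic illustration, not how Isora GRC implements its integration. Sending the request, with authentication, is left to your HTTP client.

```python
import json
from datetime import date

# Assumed mapping; check the priority names configured in your Jira instance.
PRIORITY_BY_LEVEL = {"High": "High", "Moderate": "Medium", "Low": "Low"}


def jira_issue_payload(risk: dict, project_key: str, due: date) -> dict:
    """Build a Jira REST API v2 create-issue body for one remediation item."""
    return {
        "fields": {
            "project": {"key": project_key},
            "issuetype": {"name": "Task"},  # assumed issue type
            "summary": f"[{risk['control_id']}] {risk['title']}",
            "description": (
                f"Risk ID: {risk['risk_id']}\n"
                f"Affected system: {risk['system']}\n"
                f"Risk level: {risk['level']}\n"
                f"Success criteria: {risk['success_criteria']}"
            ),
            "priority": {"name": PRIORITY_BY_LEVEL[risk["level"]]},
            "duedate": due.isoformat(),  # YYYY-MM-DD
            "labels": ["risk-remediation"],
        }
    }


if __name__ == "__main__":
    risk = {
        "risk_id": "R-014",
        "control_id": "IA-2",
        "title": "Multi-factor authentication not enforced for external users",
        "system": "customer-portal",
        "level": "High",
        "success_criteria": "MFA required for all external logins",
    }
    print(json.dumps(jira_issue_payload(risk, "SEC", date(2024, 9, 30)), indent=2))
```

Keeping the risk ID in the issue description lets a later job match issue status back to the register entry.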

NIST SP 800-30 Key Terms

NIST SP 800-30 introduces a set of terms to support the risk assessment process. They define the relationship between threats, vulnerabilities, impact, and decision-making. Understanding each one is key to implementing SP 800-30 effectively, especially in control-based models that map evidence, not hypotheticals, to risk.

Here are the key terms defined in NIST SP 800-30 and how they apply to modern, scalable risk management programs.

Risk

Risk is the potential for harm when a threat exploits a vulnerability, typically expressed as a combination of likelihood and impact.

In NIST SP 800-30, risk is determined from a set of risk factors: threat (threat sources and threat events), vulnerability, predisposing conditions, likelihood, and impact. In control-based assessments, teams treat each verified control gap as a risk and enter it into a structured register with contextual scoring.

Risk Assessment

A risk assessment identifies, estimates, and prioritizes risks to operations, assets, individuals, or other organizations. Risk assessments support decisions about security controls, mitigation strategies, and risk acceptance.

Threat Source

A threat source is any actor, condition, or circumstance with the potential to cause harm.

NIST categorizes threat sources as: adversarial (e.g., cybercriminals, insiders), accidental (e.g., user error), structural (e.g., hardware failure), or environmental (e.g., natural disasters). In control-based assessments, threat sources are inferred from missing or weak controls. For example, a missing MFA control implies a threat from credential theft.

Threat Event

A threat event is the specific action a threat source might carry out, like unauthorized access or data exfiltration. It connects the existence of a threat source to the exploitation of a vulnerability.

In control-based assessments, threat events are modeled based on what could happen if a control fails. For example, no backups = data loss; no logging = undetected breach.

Vulnerability

A vulnerability is a weakness in a system or control that a threat event could exploit. This includes misconfigurations, unpatched software, missing controls, or weak processes.

Control-based assessments treat any control marked as “Not Implemented” or “Partially Implemented” as a vulnerability.

Predisposing Condition

Predisposing conditions are environmental factors that affect the likelihood of a threat event succeeding. Examples include: internet exposure, shared administrative accounts, infrequent patching, and lack of monitoring or redundancy.

Control-based models collect this information during asset inventory and use it to inform likelihood scoring.

Likelihood

Likelihood is the estimated probability that a threat event will occur. In NIST SP 800-30, organizations define their own qualitative or quantitative scales.

Likelihood ratings in control-based assessments are based on exposure, threat activity, predisposing conditions, and historical data.

Impact

Impact refers to the consequences of a successful threat event, such as financial loss, system downtime, data breach, or regulatory violation. Like likelihood, impact scores use scales defined during assessment preparation.

Adverse impact is the negative effect that results from a realized risk and can affect the confidentiality, integrity, or availability of systems and data, or disrupt operations and damage reputation.

In control-based assessments, impact is evaluated based on system criticality, data sensitivity, and business function.

Risk Model

A risk model provides the structure for combining different elements (e.g., likelihood, impact, predisposing conditions) into a consistent risk rating. NIST SP 800-30 lets organizations choose which model to use, but every risk assessment must document its approach.

Control-based risk management programs use standard models (e.g., High/Moderate/Low) to keep scores consistent across systems and teams.
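As an illustration of a documented model, the sketch below combines three-level likelihood and impact ratings through a lookup matrix. It also includes an optional rule that raises likelihood one level when any predisposing condition is present. The cell values and that adjustment rule are assumed organizational choices, not tables taken from SP 800-30, which leaves scales and combination rules to the organization. What matters is that the matrix is written down and applied identically to every system.

```python
LEVELS = ("Low", "Moderate", "High")

# Rows: likelihood; columns: impact. An assumed, documented organizational matrix.
RISK_MATRIX = {
    "Low":      {"Low": "Low",      "Moderate": "Low",      "High": "Moderate"},
    "Moderate": {"Low": "Low",      "Moderate": "Moderate", "High": "High"},
    "High":     {"Low": "Moderate", "Moderate": "High",     "High": "High"},
}


def adjusted_likelihood(base: str, predisposing: set[str]) -> str:
    """Raise likelihood one level if any predisposing condition applies (assumed rule)."""
    idx = LEVELS.index(base)
    if predisposing:
        idx = min(idx + 1, len(LEVELS) - 1)
    return LEVELS[idx]


def risk_level(likelihood: str, impact: str) -> str:
    """Look up the overall risk rating; reject values outside the documented scale."""
    if likelihood not in LEVELS or impact not in LEVELS:
        raise ValueError(f"ratings must be one of {LEVELS}")
    return RISK_MATRIX[likelihood][impact]
```

For example, a gap with Low baseline likelihood on an internet-exposed system holding critical data becomes `risk_level(adjusted_likelihood("Low", {"internet exposure"}), "High")`, which evaluates to "High".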

Risk Response

A risk response is the action taken to address an identified risk. NIST SP 800-30 includes four response strategies:

- Mitigate: Reduce the likelihood or impact

- Accept: Acknowledge risk without action

- Transfer: Shift responsibility (e.g., via insurance, vendors)

- Avoid: Discontinue the activity or system

Each control-based risk entry should include a documented response type, rationale, and status.

Risk Factors

Risk factors are variables that influence the likelihood and impact of an event. They support consistent scoring and prioritization, and include: threat capability, control effectiveness, exposure, and historical trends.

Risk Framing

Risk framing establishes the assumptions, constraints, risk tolerance, and scoring definitions that shape the assessment process. It helps the entire organization understand risk and communicate about it consistently.

Risk Determination

Risk determination is the process of analyzing the available data to assign a likelihood, impact, and overall risk rating. It connects the evidence collected during the assessment to a structured decision that supports prioritization and response.

Information Security Risk

Information security risk is a specific subset of risk tied to the loss of confidentiality, integrity, or availability of information systems. It’s the core focus of NIST SP 800-30 and supports broader risk management as outlined in NIST SP 800-39.

Risk Assessment Report

The risk assessment report documents the purpose, scope, methodology, findings, and conclusions of an assessment. It should include identified risks, likelihood and impact ratings, assumptions, limitations, and confidence levels, and it should be tailored to each stakeholder audience so readers can make well-informed decisions.

This content is for informational purposes only and does not constitute legal or compliance advice. See our full disclaimer.